Everybody loves Azure Functions.

My team recently deployed a production service using Azure Functions as the back end backbone. I’d like to share some lessons and tips we learned along the way.

We’re using Azure functions in consumption plan – which basically means the platform scales in and out as required without our intervention. But that doesn’t mean you can just forget about scaling.

Monitor! Monitor! Monitor!

Azure Functions has a really great integration with App Insights. It makes it really easy to get near real-time data on whats going on in your app.

Coupled with Log Analytics, this is extremely valuable to get going right from the beginning. Skip this step at your own peril..

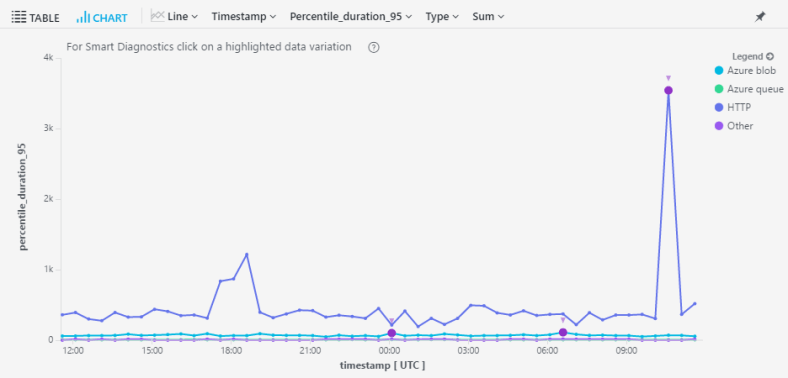

Here’s a little taster of what you can get – a very useful query that’ll get a feel for your app performance – 95th percentile request duration by request name:

requests

| where timestamp > ago(7d)

| summarize percentile(duration, 95) by name, bin(timestamp, 1h)

| render timechart

You Gotta Have Context

We’re using App Insights as our complete monitoring platform – meaning we’re calling App Insights from the Function code itself – we use it to trace logs, events and dependencies.

So if all your application monitoring data is in App Insights, it’s super-duper useful to be able to correlate all the telemetry from one request (request, traces, dependencies, events) under one context.

AF App Insights integration already sets the operation_Id field in all the requests to the context invocation Id. What we did, is set the operation Id for *all* telemetry items. You can’t really use a telmetry initializer because you don’t really control the telemetry client instance. Here’s what we did instead – store the context, and then out it in every telemetry item:

public class ApplicationInsightsTracer

{

private static readonly Lazy TelemetryClient = new

Lazy(InitTelemetryClient);

public string OperationId { get; set; }

private static TelemetryClient InitTelemetryClient()

{

var telemetryClient = new

TelemetryClient(TelemetryConfiguration.Active)

{

InstrumentationKey = ConfigurationManager.AppSettings

["APPINSIGHTS_INSTRUMENTATIONKEY"]

};

return telemetryClient;

}

}

public ApplicationInsightsTracer(Guid contextInvocationId)

{

this.OperationId = contextInvocationId.ToString();

}

public void TrackEvent(string name)

{

var eventTelemetry = new EventTelemetry(name);

telemetry.Context.Operation.Id = OperationId;

TelemetryClient.Value.TrackEvent(eventTelemetry);

}

}

Then, in the function code:

[FunctionName("MyFunc")]

public static async Task Run(

[HttpTrigger(AuthorizationLevel.Function, "post", Route = "My")] HttpRequestMessage req,

TraceWriter log,

ExecutionContext context)

{

var tracer = new ApplicationInsightsTracer(context.InvocationId);

...

}

Also – make sure you *don’t* Flush in your function code. In our tests it added about 200ms to every function invocation. Flushes happen periodically on their own.

Roles Matter

Our service has several different roles in it:

- A high-usage HTTP API which is utilized with very high concurrency.

- A job scheduling HTTP API which get called about once an hour.

- A service-bus queue based worker role that does long, heavy data crunching.

At first, when we just got started with Azure Functions, we just shoved all these functions into one Azure Functions resource. Wrong!

When you put them all together, they scale together! so whenever the long drawn processing would scale to more roles, it would scale the Http roles too and adversely affect their performance.

Different roles, with different scaling requirements, should be separated into separate Azure Functions resources.

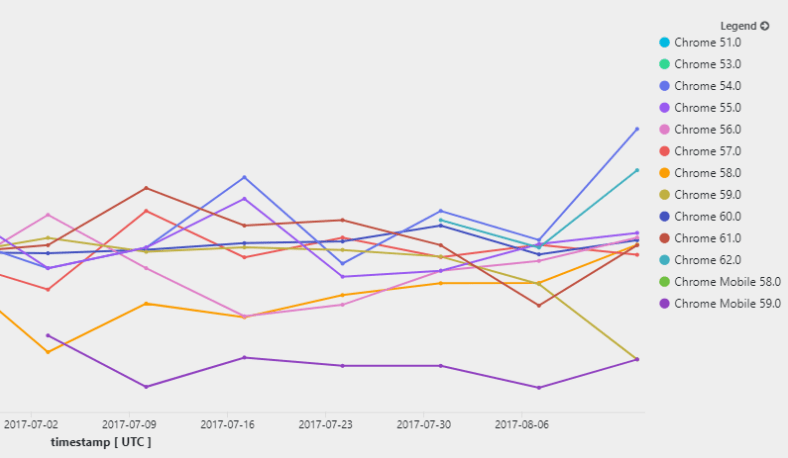

If you’ve got App Insights integration setup, here is a query that we used a lot to help us understand what exactly is scaling in our service – a distinct count of role instances per hour in our deployment:

requests

| where timestamp> ago(7d)

| summarize dcount(cloud_RoleInstance)

by bin(timestamp, 1h), cloud_RoleName

| render timechart

Different roles should also have different properties – things like the client affinity cookie should be disabled/enabled on a per role basis.